Anatomy of a Click and The Forgotten Sense!

By Adrian Hill, Automotive Business Development, Cirrus Logic

When was the last time you had your vision checked or used earplugs? We constantly tune our senses of sight and sound, savoring taste and smell in everyday moments like a great meal or the aroma of morning coffee. But what about touch?

Often considered the least significant of our senses, touch — particularly haptic feedback — is emerging as a pivotal player in our digital lives. No longer just a background sensation, haptics has the power to transform how we interact with technology. That game controller in your hand? It’s no longer just about buttons and joysticks — it’s about feeling the weight, texture, and resistance of virtual objects. Haptics turns the intangible into something you can physically experience.

Why is this critical? Because the next generation of personal electronics (smartphones, laptops, gaming controllers) is not just about visuals and sound. It’s about creating a truly immersive, multi-sensory experience in which haptics plays the starring role. For everyday users, this may seem like an exciting upgrade, but for User Experience (UX) professionals, it’s more than that. Haptics isn’t just a feature; it’s a design imperative that can make or break the user experience.

Touch, powered by precise haptic feedback, is no longer a nice-to-have. It’s a key element in crafting intuitive, engaging, and responsive interfaces. If UX professionals are serious about staying ahead, haptics isn’t just a tool — it’s a science to be mastered.

Anatomy of a Click

Let’s take one of the most fundamental touch experiences we are familiar with: the “click”. Every time you press a button, tap a key or flick a switch, your fingertip subconsciously relays to your brain that your action was successful. How? With haptic feedback. You instinctively know the feeling of a click and, moreover, you instinctively feel when something is not quite right.

Haptic feedback is an important — and often subconscious — form of feedback your brain receives. It provides tactile cues that, through proprioception, help the body and your fingertips to perceive their position, movement, and the forces acting on them.

So, with experience and proprioception, our brains already know what a good click feels like, but what about a bad click? With the advent of smartphones and touchscreen display interfaces, haptic feedback became an often-overlooked aspect of the User Interface (UI). When we focus on our phones or touchscreen displays, the brain can use visual feedback: you pressed it, it changed. Simple? Yes, until we realize that in some situations, such as when driving, staring at a phone or display is forbidden or even dangerous. The confirmation of action the brain is expecting, the ‘click’, needs to be seamless and intuitive, without the distraction of searching a large screen for visual changes. The proprioception of touch matters.

Nowhere is this more important than when we enter the world of automotive UX.

Human Machine Interface (HMI) and UX professionals need to understand the anatomy of a click, and how touch/force systems and haptic feedback can provide the intuitive interface our fingertips have come to expect, and that our brains can naturally process without distraction.

I’m not going to look into the biology of touch neuroreceptors, or why fingertips cannot differentiate Z-axis from Y-axis vibration of smart surfaces at low frequencies (you can read more about that aspect in “Your Senses Deserve More” by Peter Hall). Instead, let’s examine a haptic “click” profile and some of the key parameters for designing and optimizing a high-definition click: that is, a click that can be replicated consistently by specifying the parameters that define a good click experience and providing clear requirements.

First, let’s take a moment to understand the touch interaction of a smart surface and how a virtual click interacts with it to deliver the desired UX.

There are two main aspects sensed in an optimized smart surface, virtual button or interactive display: touch and force.

- Touch information (and pre-touch or proximity/approach information) is most often obtained using capacitive touch sensing. Capacitive touch can typically provide a well-defined touch presence and touch location information. However, capacitive sensing has limited resolution (and consistency) in detecting how hard the fingertip itself is pressing.

- Force sensing augments touch information to provide press information. There are several approaches to force touch measurement, and they all strive to measure, repeatably and consistently, the force associated with the press of the fingertip on the smart surface or display.

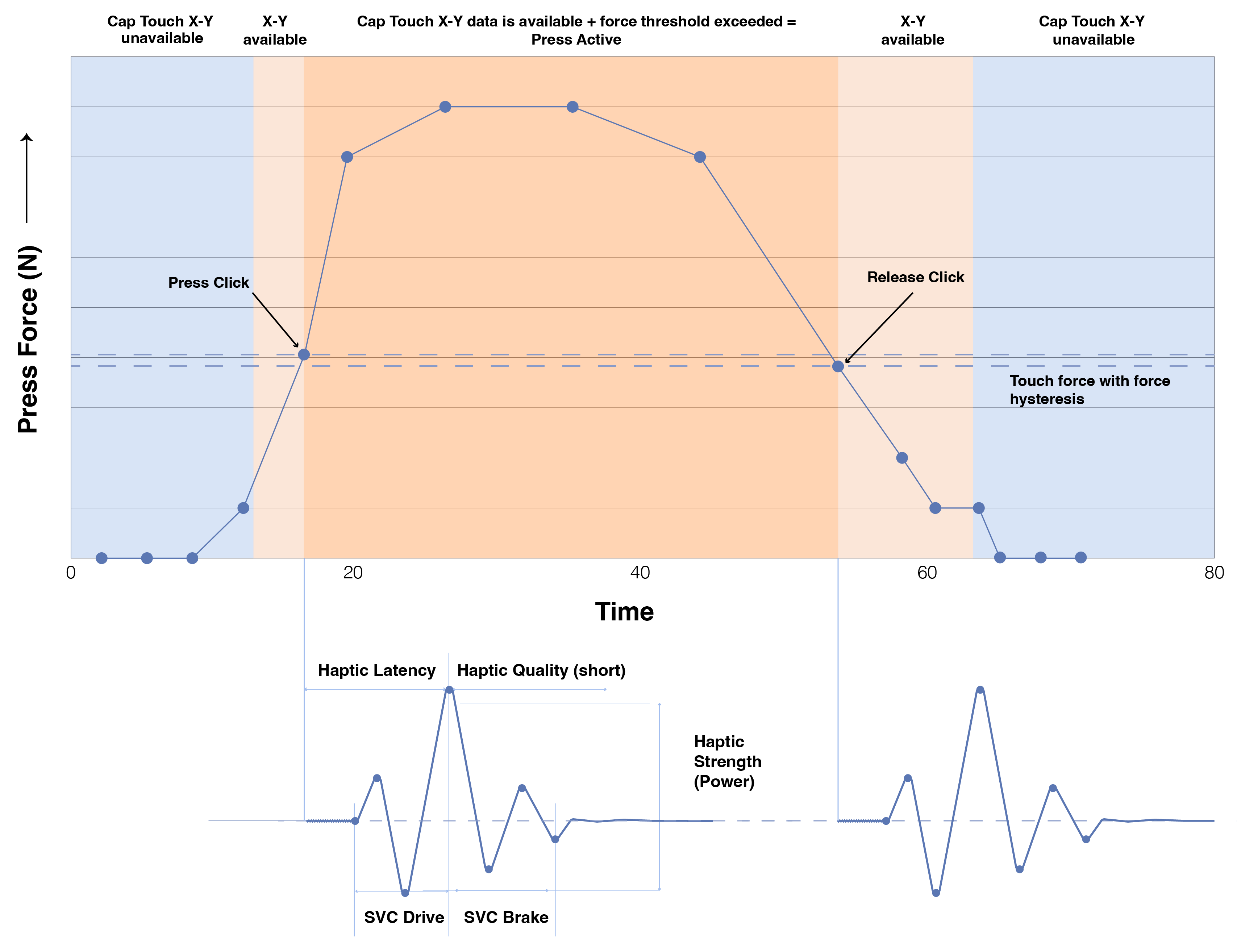

In the diagram below, we break up the anatomy of a click into the distinct phases of the press event. Let’s take a single press event with a virtual single-pole contact as our example and learn how to make a high-definition click, so that the fingertip receptors feel a click from the solid surface even though the surface is not moving (or tin-canning) at all.

- Pre-touch: The fingertip may be approaching the detection area/smart surface, but presence or X-Y location is not discernible. This may be set by a calibrated threshold, or may simply be because the capacitive touch signal is below the noise floor.

- Touch: The fingertip is within range of (usually touching) the capacitive-touch-enabled surface and, in the case of displays, the X-Y location is available. The press force is below the chosen threshold (including the force hysteresis).

- Press: The fingertip force surpasses the chosen threshold and the press is active; the haptic feedback, or primary click, is triggered.

- Release: Fingertip pressure is released and the press force moves below the chosen threshold (including the force hysteresis); the haptic feedback, or secondary click, is triggered. The X-Y location is still available.

- Post-touch: The fingertip is removed from the detection area/smart surface and presence or X-Y location is no longer available.
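The five phases above can be tracked with a small state machine. The Python below is a minimal sketch under stated assumptions: `ClickTracker`, `Phase`, and the threshold values are hypothetical, force is assumed to arrive in newtons alongside a boolean capacitive touch flag, and the release threshold is modelled as the press threshold minus a fixed hysteresis. It is not a description of any Cirrus Logic firmware.

```python
from enum import Enum


class Phase(Enum):
    PRE_TOUCH = "pre-touch"
    TOUCH = "touch"
    PRESS = "press"
    RELEASE = "release"
    POST_TOUCH = "post-touch"


class ClickTracker:
    """Hypothetical tracker for one virtual single-pole button."""

    def __init__(self, press_threshold_n=2.0, hysteresis_n=0.5):
        # Illustrative values only; real thresholds are calibrated per surface.
        self.press_threshold = press_threshold_n
        self.release_threshold = press_threshold_n - hysteresis_n
        self.phase = Phase.PRE_TOUCH
        self.pressed = False

    def update(self, touching, force_n):
        """Process one sample; return 'primary_click', 'secondary_click' or None."""
        event = None
        if self.pressed and (not touching or force_n < self.release_threshold):
            # Force fell below threshold minus hysteresis (or finger lifted): release click
            self.pressed = False
            event = "secondary_click"
        elif not self.pressed and touching and force_n >= self.press_threshold:
            # Force crossed the press threshold: primary click
            self.pressed = True
            event = "primary_click"

        if self.pressed:
            self.phase = Phase.PRESS
        elif touching:
            # Below the press threshold: before a press (TOUCH) or after one (RELEASE)
            if event == "secondary_click" or self.phase is Phase.RELEASE:
                self.phase = Phase.RELEASE
            else:
                self.phase = Phase.TOUCH
        elif self.phase is not Phase.PRE_TOUCH:
            # Finger has left the detection area after some contact
            self.phase = Phase.POST_TOUCH
        return event


samples = [(False, 0.0), (True, 0.3), (True, 1.5), (True, 2.2),
           (True, 1.8), (True, 1.2), (True, 0.4), (False, 0.0)]
tracker = ClickTracker()
for touching, force in samples:
    event = tracker.update(touching, force)
    print(f"{tracker.phase.value:<10} {event or ''}")
```

The 1.8 N sample shows the hysteresis at work: it is below the 2.0 N press threshold but above the 1.5 N release threshold, so the press stays active and no spurious secondary click fires.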

So, what makes a good click? Several factors come into play. Let’s examine each of these five phases in detail.

The Pre-touch Phase

Pre-touch information can be used to bring shy-tech surfaces to “life,” increase backlighting or wake up sub-systems for the UI. In larger cabin spaces, this also can be achieved with an optical detection system for more directional and distant pre-touch wake up.

It is important to avoid false input events, such as a shirt sleeve brushing against a screen menu, control panel or steering wheel switch.

The Touch Phase

In the touch phase, we can be:

- In transition to a press event, waiting for the press force threshold to be reached before moving to the next phase.

- In a touch phase that stays below the press force threshold for longer than a defined (de-bounce) time period, entering another state in which the X-Y location is available for panel/display interaction. This can be used to initiate continuous “textured” haptics, providing haptic feedback that is consistent with the virtual surface design and with the direction or speed of movement (a finger dragging across the surface). This is very useful for “no-look” X-Y navigation of the surface. We do not trigger a press event (“click”) haptic.

- Momentarily entering the touch phase, then exiting it almost immediately as fingertip pressure is removed. This becomes a non-event, and de-bouncing is used to avoid unintentionally triggering textured haptics.
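The de-bounce rule for the touch phase can be sketched as follows, assuming timestamped samples of `(t_ms, touching, force_n)`. The function name, the 2.0 N press threshold, and the 50 ms de-bounce period are hypothetical placeholders, not recommended values.

```python
def textured_haptics_start(samples, press_threshold_n=2.0, debounce_ms=50.0):
    """Return the time (ms) at which continuous textured haptics may start, or None.

    samples: (t_ms, touching, force_n) tuples in time order.
    """
    touch_start = None
    for t_ms, touching, force_n in samples:
        if not touching:
            # A touch that ends before the de-bounce period is a non-event.
            touch_start = None
            continue
        if force_n >= press_threshold_n:
            # The touch became a press; the click path handles it instead.
            return None
        if touch_start is None:
            touch_start = t_ms
        if t_ms - touch_start >= debounce_ms:
            # Light touch sustained past the de-bounce period: enable textures.
            return t_ms
    return None


momentary = [(0, True, 0.5), (10, True, 0.6), (20, False, 0.0)]
sustained = [(0, True, 0.5), (25, True, 0.6), (50, True, 0.6), (75, True, 0.7)]
quick_press = [(0, True, 0.5), (10, True, 2.5)]
for name, trace in [("momentary", momentary), ("sustained", sustained),
                    ("quick_press", quick_press)]:
    print(name, textured_haptics_start(trace))
```

For simplicity the sketch stops at the first press; a real HMI stack would keep running the textured and click paths side by side for the whole touch.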

The Press Phase

The haptics should be triggered with the lowest possible latency from the force threshold being exceeded to peak acceleration.

- A typical user cannot detect a press-to-haptic latency below 20 milliseconds (a good click).

- Above that latency, however, the delay becomes perceptible to an increasing number of users, and latencies above 30 milliseconds result in a degraded click experience.
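As a worked example, the perception bands above can be encoded directly and applied to a latency budget. The 20 ms and 30 ms boundaries come from the text; the budget stages and their values are purely hypothetical, chosen only to show how stage latencies add up against the target.

```python
def rate_press_to_haptic_latency(latency_ms):
    """Map a press-to-haptic latency onto the perception bands described above."""
    if latency_ms < 0:
        raise ValueError("latency cannot be negative")
    if latency_ms < 20.0:
        return "imperceptible"               # a good click
    if latency_ms <= 30.0:
        return "perceptible to some users"   # increasingly noticeable
    return "degraded"                        # degraded click experience


# Hypothetical latency budget in ms; stage names and values are illustrative only.
budget_ms = {
    "force sample period (worst case)": 2.0,
    "threshold detection and trigger": 1.0,
    "actuator rise to peak acceleration": 5.0,
}
total_ms = sum(budget_ms.values())
print(total_ms, rate_press_to_haptic_latency(total_ms))
```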

The time-to-haptic (latency) is one of the most important parameters to specify when creating a good, high-definition click.

The drive requirements for the haptic actuator are defined by the actuator type, its mass, and the ratio of actuator mass to (movable) surface mass. Closed-loop control of the haptic actuator allows faster acceleration of the actuator mass and a shorter time-to-haptic.

To perceive a strong haptic, a typical target is 3 g to 4 g (peak-to-peak) acceleration at the point of contact between fingertip and surface. This is the base haptic acceleration level for good click perception. In a mechanically noisy environment (think of a stationary vehicle versus one driving on a rough road), the absolute haptic acceleration required for good click perception increases, especially if the background vibration is close to the haptic in the frequency domain.

The realism of the click feel is also determined by the ratio of maximum acceleration to time. A real click has a high ratio: for a given actuator maximum acceleration, the ability to drive the actuator to its maximum output and then brake it to below a perceptible threshold is key to generating a good click. Closed-loop control of the actuator mass is required to optimize fast acceleration to peak followed by fast braking. This can be achieved either with a position sensor (e.g., a Hall-effect sensor) sensing the magnetic mass, or without an additional sensor by using a derived position measurement such as Cirrus Logic’s Sensorless Velocity Control (SVC™) feature.

The fast acceleration and near-immediate braking of the actuator mass, achieved with either the sensor-based or the SVC method, delivers an individual, high-definition click event.
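To illustrate why braking matters, here is a toy simulation; it is not Cirrus Logic’s SVC algorithm. The actuator is modelled as a unit-mass, lightly damped resonator (a hypothetical 170 Hz resonance with a 2% damping ratio) driven for two cycles at resonance. After the drive burst it is either left to ring freely (open loop) or braked by a force proportional to its velocity, a generic form of closed-loop velocity feedback. Braking time is measured from peak acceleration until the acceleration magnitude stays below 10% of that peak. Units are arbitrary and every parameter is an assumption.

```python
import math

F0_HZ = 170.0   # hypothetical actuator resonance
ZETA = 0.02     # hypothetical intrinsic damping ratio
W0 = 2 * math.pi * F0_HZ


def simulate(brake_gain, drive_cycles=2, dt=1e-5, t_end=0.2):
    """Simulate a unit-mass resonator; return (times_s, accelerations)."""
    k = W0 * W0            # stiffness giving resonance at F0_HZ
    c = 2 * ZETA * W0      # intrinsic damping coefficient
    drive_end = drive_cycles / F0_HZ
    x = v = 0.0
    times, accels = [], []
    for n in range(int(round(t_end / dt))):
        t = n * dt
        if t < drive_end:
            force = math.sin(W0 * t)   # drive burst at resonance
        else:
            force = -brake_gain * v    # velocity-feedback braking (0 means open loop)
        a = force - k * x - c * v
        v += a * dt                    # semi-implicit Euler integration
        x += v * dt
        times.append(t)
        accels.append(a)
    return times, accels


def braking_time(times, accels):
    """Time from peak |a| until |a| stays below 10% of that peak."""
    peak_i = max(range(len(accels)), key=lambda i: abs(accels[i]))
    limit = 0.1 * abs(accels[peak_i])
    last_i = max(i for i, a in enumerate(accels) if abs(a) >= limit)
    return times[last_i] - times[peak_i]


open_loop = braking_time(*simulate(brake_gain=0.0))
braked = braking_time(*simulate(brake_gain=1.4 * W0))  # total damping ratio ~0.72
print(f"open loop: {open_loop * 1e3:.1f} ms, braked: {braked * 1e3:.1f} ms")
```

With these assumed parameters the free-ringing actuator takes on the order of 100 ms to decay, while the braked one settles within a few milliseconds; the exact figures depend entirely on the model.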

The Release Phase

To transform a great single click event into a compelling virtual button or surface feel, the release phase of the click is equally important. This phase is when the fingertip press force decreases below the calibrated force threshold.

The simplest approach is to replicate the press click waveform, at or around the same force threshold, but on the release phase (force decreasing). To make the click feeling compelling, the latency from force threshold transition to click event must again be minimized for a consistent click feel.

A more advanced approach is enabled by a smart closed-loop haptics driver, such as the CS40L5x family from Cirrus Logic, with onboard haptic waveform memory and algorithms. The release event triggers a different waveform from the press event, tuned to the switch/surface type and the click feeling required, with both a different maximum acceleration and a different frequency profile (texture). Using SVC to control the chosen waveform accurately in closed loop, the driver enables an array of compelling virtual click parameters, which the HMI designer tunes to realize bespoke, high-definition, low-latency, complementary press and release haptics.
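The difference between the simple and the advanced release approach can be sketched as a lookup from click event to waveform. Everything here is hypothetical: `ClickWaveform`, `synthesize`, the Hann-windowed sine burst shape, the 8 kHz sample rate, and the tuning values are illustrative stand-ins for a target acceleration profile, not a CS40L5x waveform format or API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ClickWaveform:
    """Hypothetical click description; not a CS40L5x waveform format."""
    peak_g: float    # target peak acceleration at the fingertip
    freq_hz: float   # carrier frequency, which shapes the perceived texture
    cycles: int      # burst length in carrier cycles


def synthesize(w, sample_rate_hz=8000.0):
    """Hann-windowed sine burst scaled so its largest sample equals peak_g."""
    n = max(2, round(w.cycles * sample_rate_hz / w.freq_hz))
    burst = []
    for i in range(n):
        window = 0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1))
        burst.append(window * math.sin(2 * math.pi * w.freq_hz * i / sample_rate_hz))
    scale = w.peak_g / max(abs(s) for s in burst)
    return [s * scale for s in burst]


# Illustrative tuning: a firmer press click and a lighter, higher-pitched release click.
PRESS_CLICK = ClickWaveform(peak_g=1.8, freq_hz=170.0, cycles=2)
RELEASE_CLICK = ClickWaveform(peak_g=1.4, freq_hz=220.0, cycles=1)

# Simplest approach: map both events to PRESS_CLICK. Advanced: use distinct waveforms.
WAVEFORM_FOR_EVENT = {
    "primary_click": synthesize(PRESS_CLICK),
    "secondary_click": synthesize(RELEASE_CLICK),
}
for event, samples in WAVEFORM_FOR_EVENT.items():
    print(event, len(samples), round(max(abs(s) for s in samples), 3))
```

Keeping waveforms in a table keyed by event means the release feel can be retuned without touching the press/release detection logic.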

To summarize, a strong, high-definition click can be specified with the following guidance:

| Parameter | Guidance |
|---|---|
| Haptic latency (press threshold to peak acceleration) | < 20 ms |
| Haptic strength (peak acceleration range) | 3 g to 4 g (peak-to-peak), depending on application |
| Braking (time from peak acceleration to < 10% of peak) | < 15 ms |
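One way to check a measured click against this table is to derive all three parameters from a sampled fingertip-acceleration trace. The sketch below assumes the trace is already in g with millisecond timestamps and that the force-threshold crossing time is known; the names and the sample trace are hypothetical. Because a click oscillates through zero, braking is interpreted here as the time until the acceleration magnitude *stays* below 10% of peak.

```python
from dataclasses import dataclass


@dataclass
class ClickMetrics:
    latency_ms: float
    peak_to_peak_g: float
    braking_ms: float


def measure_click(times_ms, accel_g, threshold_time_ms):
    """Derive the three table parameters from a sampled fingertip-acceleration trace."""
    peak_i = max(range(len(accel_g)), key=lambda i: abs(accel_g[i]))
    limit = 0.1 * abs(accel_g[peak_i])
    # Last sample still at or above 10% of peak; after it, |a| stays below 10%.
    last_i = max(i for i, a in enumerate(accel_g) if abs(a) >= limit)
    return ClickMetrics(
        latency_ms=times_ms[peak_i] - threshold_time_ms,
        peak_to_peak_g=max(accel_g) - min(accel_g),
        braking_ms=times_ms[last_i] - times_ms[peak_i],
    )


def meets_guidance(m, min_pk_pk_g=3.0, max_pk_pk_g=4.0):
    """Check metrics against the summary table above."""
    return (m.latency_ms < 20.0
            and min_pk_pk_g <= m.peak_to_peak_g <= max_pk_pk_g
            and m.braking_ms < 15.0)


# Hypothetical 1 kHz trace; the force threshold was crossed at t = 0.5 ms.
times = list(range(12))
accel = [0.0, 0.2, 1.0, 1.8, -1.7, 0.9, -0.4, 0.15, 0.05, 0.0, 0.0, 0.0]
m = measure_click(times, accel, threshold_time_ms=0.5)
print(m, meets_guidance(m))
```

The peak-to-peak range is a parameter because, as noted earlier, a noisy environment may call for a higher target.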

Breaking the press event down into phases and dissecting each one helps us better understand the anatomy of a click. The time-domain relationship between press sensing and the acceleration and braking of the haptic actuator mass, as well as the importance of latency, becomes very apparent. Meanwhile, the low-latency, on-demand availability of different single-click and continuous haptic waveforms in the CS40L5x family opens new possibilities for haptics design, especially when delivered with the power and consistency of SVC.

Touch is not the forgotten sense in automotive UX! Everything learned in the other industries that have driven the proliferation of smart surfaces and touchscreen displays can be applied to our automotive HMI challenges, including the use of high-definition haptics.

The fact that we know, and can instinctively feel through our fingertips, what feels right and what feels wrong is one of the foremost examples in the world of haptics today, embodied and illustrated by the good and bad “click” experience.

As more of our world becomes virtual, the ability to specify, design and implement HMI solutions with intuitive, compelling touch using high-definition haptics is a part of the immersive and personalized user experience customers expect.

With the latest breakthroughs in haptics driver technology embodied by the Cirrus Logic CS40L5x family of devices, delivering high-definition textured haptics and an industry-leading “good click” is, well… at your fingertips.